Our team had avoided this for a while, but some unidentified changes meant the Airflow MWAA requirements install could no longer install packages from PyPI (option 1), and we finally had to work out how to build a plugins.zip file that includes all of the WHL packages needed (option 2). Amazon support had also strongly suggested option 2 any time we had issues. Both options are detailed here: https://docs.aws.amazon.com/mwaa/latest/userguide/best-practices-dependencies.html.

Taking lessons from creating a build process for our Lambda layer, I found steps that got me to a working plugins.zip + requirements.txt file.

NOTE: I could not get the mwaa-local-runner process to work on my Windows machine, even though it is always presented as the “easy” way to troubleshoot this.

There are two requirements.txt files.

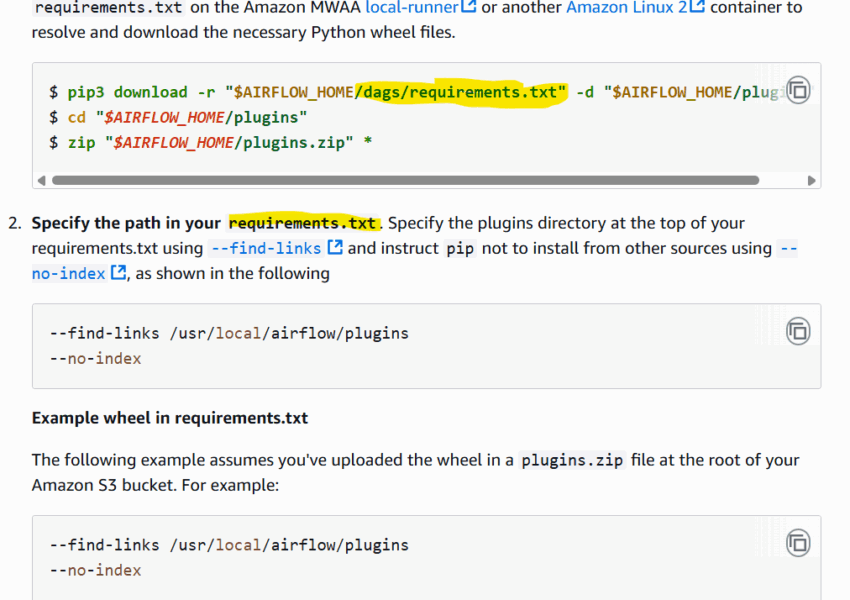

Notice in the above image they reference a /dags/requirements.txt file and then the MWAA configuration requirements.txt file. I went forward assuming their usage as:

- The /dags/requirements.txt file is the requirements file that actually specifies all of the required libraries and dependencies that need to be downloaded and included in plugins.zip.

- The requirements.txt file used in the MWAA configuration for Airflow specifies the required libraries that will get pulled locally from your extracted plugins.zip file at /usr/local/airflow/plugins.

I haven’t decided how to manage these – if they could be the same except for --find-links and --no-index directives or if it’s best to omit versions.

Use the lambda build process as a model

https://docs.aws.amazon.com/lambda/latest/dg/python-package.html#python-package-native-libraries

The Python version and platform used were important for getting a compatible set of downloaded WHL files. Once I used pip with the --platform option, it began to give me meaningful output about dependency mismatches. You should also build against the targeted version of Python, using a venv, a Docker image, or potentially the --python-version flag for the pip command.

pip3 download -r dag_requirements.txt -d reqs --platform=manylinux2014_x86_64 --only-binary=":all:"
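As a rough sketch of the same command with the interpreter version pinned through --python-version, the arguments could be assembled in Python as below. The function name and the 3.11 value are assumptions for illustration only; use whatever Python version your MWAA environment actually runs.

```python
import sys


def build_pip_download_args(requirements, dest, python_version,
                            platform="manylinux2014_x86_64"):
    """Return the argument list for a cross-platform `pip download` call."""
    return [
        sys.executable, "-m", "pip", "download",
        "-r", requirements,                  # build-time requirements file
        "-d", dest,                          # directory that receives the WHL files
        "--platform", platform,              # target platform, not the local one
        "--python-version", python_version,  # assumed target interpreter version
        "--only-binary=:all:",               # pip requires this when --platform is set
    ]


if __name__ == "__main__":
    # "3.11" is a placeholder, not a value taken from this post.
    args = build_pip_download_args("dag_requirements.txt", "reqs", "3.11")
    print(" ".join(args))
    # To actually run it: import subprocess; subprocess.run(args, check=True)
```

Keeping the arguments in a list makes it easy to rerun the download for a different Python version or platform while iterating on the requirements file.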

Run, fail, modify, repeat (locally)

Our requirements.txt file that had been working had just the bare minimum airflow providers:

apache-airflow-providers-snowflake==5.5.1

apache-airflow-providers-openlineage==1.8.0

snowflake-connector-python==3.10.1

snowflake-sqlalchemy==1.5.3

openlineage-python==1.16.0

openlineage-python[kafka]

apache-airflow-providers-common-sql==1.14.0

You’ll begin to get output like this:

$ pip3 download -r dag_requirements.txt -d reqs --platform=manylinux2014_x86_64 --only-binary=":all:"

ERROR: Cannot install apache-airflow-providers-snowflake because these package versions have conflicting dependencies.

The conflict is caused by:

apache-airflow 2.10.5 depends on python-nvd3>=0.15.0

apache-airflow 2.10.4 depends on python-nvd3>=0.15.0

...

This was the best explanation I found for this type of error: https://github.com/aws/aws-mwaa-local-runner/issues/257. The actual issue is that no compatible WHL build is available for the dependency at the required version(s). I followed these steps to resolve these errors:

- Determine the desired version of the library for your version of Airflow. The constraints files were the best resource for this:

  - https://raw.githubusercontent.com/apache/airflow/constraints-{Airflow-version}/constraints-{Python-version}.txt

  - Review the guide under “Add a constraints statement” in the MWAA docs, option 1 (PyPI-sourced install), section 3.

- Go to the PyPI page for the library (ex: python-nvd3: https://pypi.org/project/python-nvd3/)

- Check the release history for the desired version

- Go to Download files and find the source distribution (.tar.gz). Add this URL to your dags/requirements.txt file.
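The first step above, looking up the pinned version in the constraints file, can be sketched roughly as follows. The helper names are hypothetical, the version numbers passed to constraints_url are placeholders, and the sample text is a hand-written excerpt in the constraints file format rather than a copy of a real file.

```python
import re


def constraints_url(airflow_version, python_version):
    """Fill in the constraints URL template for a given Airflow/Python pair."""
    return (
        "https://raw.githubusercontent.com/apache/airflow/"
        f"constraints-{airflow_version}/constraints-{python_version}.txt"
    )


def normalize(name):
    """Normalize a package name so python_nvd3 and python-nvd3 compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()


def pinned_version(constraints_text, package):
    """Return the version pinned for `package`, or None if it is not listed."""
    wanted = normalize(package)
    for line in constraints_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue  # skip blanks, comments and anything that is not a pin
        name, _, version = line.partition("==")
        if normalize(name) == wanted:
            return version.strip()
    return None


if __name__ == "__main__":
    # Placeholder versions; substitute the ones your environment runs.
    print(constraints_url("2.10.3", "3.11"))
    # Illustrative excerpt only; download the real file from the URL above.
    sample = "# sample constraints\ndill==0.3.1.1\npython-nvd3==0.16.0\n"
    print(pinned_version(sample, "python-nvd3"))
```

Once the pinned version is known, the remaining steps (finding the matching .tar.gz on PyPI) are still manual.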

Based on our dependencies, I ended up including 4 libraries this way:

https://files.pythonhosted.org/packages/79/80/dc4436a9324937bad40ca615eaccf86a71e28222193cd32292f0c30f2fb6/google_re2-1.1.20240601.tar.gz

https://files.pythonhosted.org/packages/6f/a4/691ab63b17505a26096608cc309960b5a6bdf39e4ba1a793d5f9b1a53270/unicodecsv-0.14.1.tar.gz

https://files.pythonhosted.org/packages/c7/11/345f3173809cea7f1a193bfbf02403fff250a3360e0e118a1630985e547d/dill-0.3.1.1.tar.gz

https://files.pythonhosted.org/packages/54/e7/2a0bf4d9209d23a9121ab3f84e2689695d1ceba417f279f480af2948abef/python-nvd3-0.16.0.tar.gz

Test in Airflow

The WHL files can just be zipped into plugins.zip and uploaded to S3, then selected in your MWAA config. Each update to the selected file version will restart Airflow and trigger a requirements install.

- Review your Airflow webserver logs (set the log level to INFO as well)

- Usually this will be CloudWatch > Log Groups > airflow-[name]-WebServer > requirements_install…

- Review any error messages

From these messages you can begin to add the additional dependent libraries to your requirements.txt, example:

[INFO] – snowflake-connector-python 3.10.1 depends on pyOpenSSL<25.0.0 and >=16.2.0

2025-04-29T22:53:37.406Z

[INFO] – The user requested (constraint) pyopenssl==24.1.0

As a result, I would then add pyOpenSSL==24.1.0 to the dags/requirements.txt file. In the end I had about 11 additional libraries in my dags/requirements.txt.
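If you end up repeating this many times, pulling the pins out of the log text can be scripted. The sketch below is only a rough helper: it assumes the message wording matches the "The user requested (constraint) ..." line quoted above, which may differ in your logs, and the function name is made up for illustration.

```python
import re

# Matches lines such as: The user requested (constraint) pyopenssl==24.1.0
CONSTRAINT_RE = re.compile(
    r"The user requested \(constraint\) ([A-Za-z0-9._-]+)==(\S+)"
)


def pins_from_log(log_text):
    """Collect {package: version} for every constraint line found in the log."""
    pins = {}
    for name, version in CONSTRAINT_RE.findall(log_text):
        pins[name] = version
    return pins


if __name__ == "__main__":
    # Log text copied by hand from the requirements_install log stream.
    log_text = "[INFO] The user requested (constraint) pyopenssl==24.1.0"
    for name, version in sorted(pins_from_log(log_text).items()):
        # Each printed line can be pasted into the build-time requirements file.
        print(f"{name}=={version}")
```

The result is a candidate list to review, not something to append blindly, since a pin only helps if a compatible WHL (or source archive) exists for it.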

Don’t forget about your actual plugins

You may have actual custom plugins in a plugins/ directory. You’ll see in my pip download command that I’m downloading the WHL files to a reqs/ directory. This is so I can:

- Download all the WHL files into reqs/

- Copy the contents of my custom plugins from plugins/ into reqs/

- Zip the contents of reqs/ into plugins.zip for upload.
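The copy and zip steps above could be scripted along these lines. This is a minimal sketch, not the exact process used here: the function name is hypothetical, and it assumes the zip is written outside reqs/ so the archive does not try to include itself.

```python
import shutil
import zipfile
from pathlib import Path


def build_plugins_zip(reqs_dir, plugins_dir, zip_path):
    """Copy custom plugins into reqs_dir, then zip reqs_dir's contents."""
    reqs = Path(reqs_dir)
    plugins = Path(plugins_dir)

    # Merge custom plugin files into the directory holding the WHL files.
    if plugins.is_dir():
        shutil.copytree(plugins, reqs, dirs_exist_ok=True)

    # Store paths relative to reqs_dir so files sit at the root of the zip.
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(reqs.rglob("*")):
            if path.is_file():
                archive.write(path, path.relative_to(reqs).as_posix())
    return Path(zip_path)


if __name__ == "__main__":
    # Directory names follow the post; plugins.zip lands in the current folder.
    if Path("reqs").is_dir():
        print(build_plugins_zip("reqs", "plugins", "plugins.zip"))
```

Writing the entries relative to reqs/ is the important design choice: the WHL files then sit at the top level of the archive rather than under a reqs/ folder.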

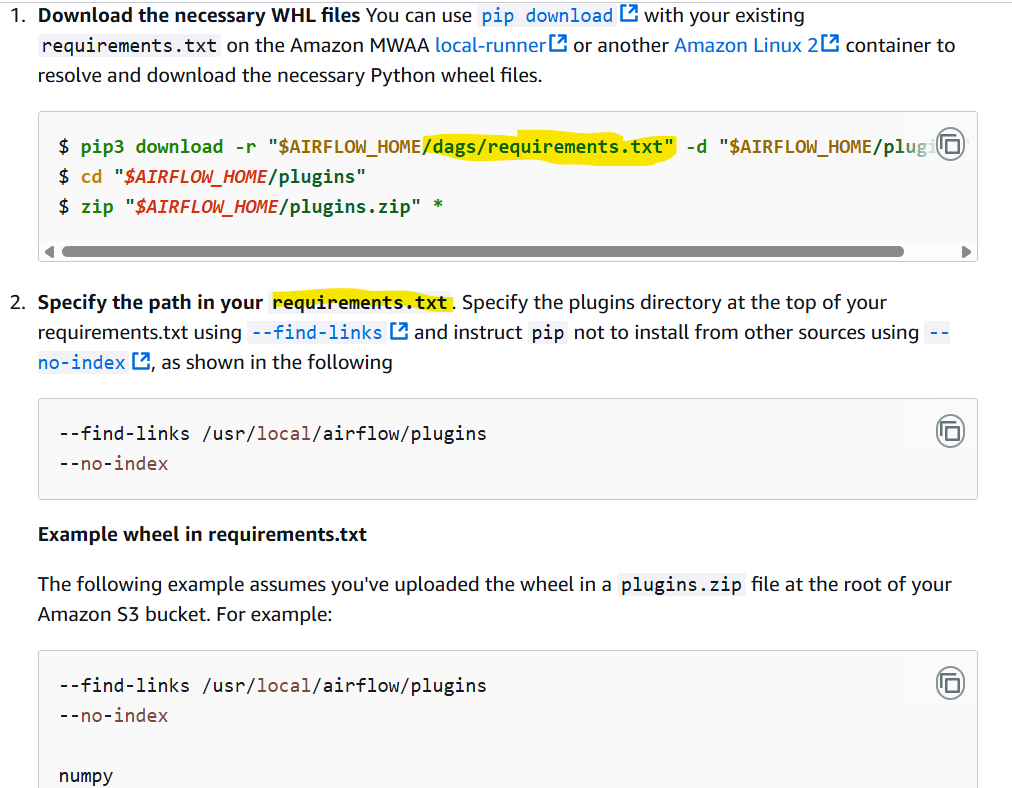

MWAA requirements.txt

The requirements.txt file you’ll specify for Airflow is just to force it to use your archive of source WHL files. You don’t have to specify versions, and may only need to specify the original set of libraries you need in Airflow (and not every one you needed in the dags/requirements.txt to get all the WHL files).

--find-links /usr/local/airflow/plugins

--no-index

apache-airflow-providers-snowflake==5.5.1

...
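Since this file is just the two pip directives followed by your top-level packages, it can be generated rather than maintained by hand. A small sketch, with a made-up function name and the plugins path taken from the file above:

```python
def render_mwaa_requirements(packages,
                             plugins_path="/usr/local/airflow/plugins"):
    """Render the MWAA-side requirements.txt that installs only from plugins.zip."""
    lines = [
        f"--find-links {plugins_path}",  # look for WHL files in the extracted zip
        "--no-index",                    # never fall back to PyPI
        *packages,                       # top-level packages, pinned or not
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_mwaa_requirements(["apache-airflow-providers-snowflake==5.5.1"]))
```

Whether to keep the version pins in this file or drop them is still an open choice; the function simply writes out whatever strings it is given.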